GitHub repo templates are a good way to speed up the creation of new services that share a lot of boilerplate code. Since we have a lot of gRPC services to build, I’m going to start service development by building out a GitHub template for our gRPC services. I will start the template with the parts I know we’ll need, but as we build out more services, we will refine the template.

Why gRPC?

We have choices about how the services deployed to our k8s cluster communicate with each other. They can make REST calls to each other, or there can be a pub/sub relationship between the services using queues like RabbitMQ, Kafka, or NATS. You can implement a message-passing interface with the same net/http giblets as REST, without, well…REST. You might even establish a two-way, fully stateful TCP/IP connection between two services. We might explore this last one later on. “But that’s a blocking call,” you say? Yes, it is, but I’ve got ideas, so stay tuned. gRPC lands among those options with advantages and disadvantages that depend on the implementation context.

I am using gRPC in this stretch project because I like gRPC, and I want to know that I am using it where it’s appropriate. I’m simply trying to eliminate a potential bias. I chose it in past projects because it solved immediate scaling issues that cropped up in a high-priority project: I simply could not get the pub/sub model I was using to scale fast enough to finish a processing job within a rigid time constraint. I’m not saying I couldn’t have eventually figured it out with queues, and I’m not saying it wasn’t some type of skill issue on my part. I tried a gRPC-based approach to the problem, and it worked. I patted myself on the back and moved on. I kept implementing more gRPC services because it felt less clunky than using an internal REST API among the services. In short, I used it, liked it, and kept using it.

I’m out of the heat of battle in this nights-and-weekends project, and I’d like to take the time to explore that decision in a more disciplined manner. I’m not going to regurgitate some chart I found somewhere else; we’re gonna build this out and find out on our own. First, we have to get some of these services built; then we need functionality that is not trivial.

My Usual Approach

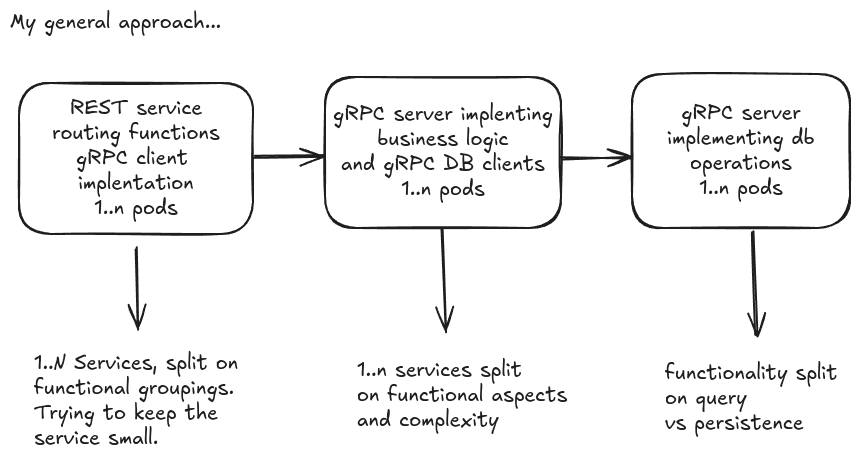

My road to gRPC started with breaking a monolithic application into smaller services that would run better in a Kubernetes (k8s) cluster. My first cut split REST routing and database operations from each other. That first attempt was a little bloody because it lacked good separation of business concerns. I was forced to learn a hard lesson about that separation when I had to change database technologies twice. That lesson sent me down the road of a molecular structure; that’s the best analogy I can think of at the moment. Each molecule is made of service atoms: HTTP/REST routing and authentication live in one service, business logic and complex operations live in their own services, and database operations live in their own services.

This approach results in the three-atom molecule depicted in the figure above, which we will be replicating. We will create more molecules based on the workflow of our fictitious company. Our initial goal is to keep the services small. I intentionally avoid the term ‘micro-service’, as it implies a strict adherence to ‘single responsibility’ that I don’t want to limit myself to. While many would describe what I’m doing as building micro-services, I prefer to say that I’m building services that are as small as possible without creating deployment issues through excessive granularity. It’s a delicate balance that we’ll be navigating through experimentation.
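Here is a minimal, in-process Go sketch of one three-atom molecule that makes the analogy concrete. The `Gateway`, `Logic`, and `Store` names, the token check, and the order lookup are all hypothetical stand-ins for our fictitious company. In the real design each atom is its own service, and these method calls become gRPC calls. The sketch illustrates the lesson from the database changes: the business logic depends only on a `Store` interface, so swapping out the database atom doesn’t touch the other two.

```go
package main

import (
	"errors"
	"fmt"
)

// Store is the database atom (hypothetical interface). In the real design this
// would be a separate gRPC service rather than an in-process interface.
type Store interface {
	GetOrder(id string) (string, error)
}

// memStore is an in-memory stand-in for a database service.
type memStore struct{ orders map[string]string }

func (m memStore) GetOrder(id string) (string, error) {
	o, ok := m.orders[id]
	if !ok {
		return "", errors.New("order not found")
	}
	return o, nil
}

// Logic is the business-logic atom; it only knows about the Store interface,
// so changing database technology means swapping the Store implementation.
type Logic struct{ store Store }

func (l Logic) OrderSummary(id string) (string, error) {
	o, err := l.store.GetOrder(id)
	if err != nil {
		return "", fmt.Errorf("summary for %s: %w", id, err)
	}
	return "order " + id + ": " + o, nil
}

// Gateway is the routing/authentication atom. The token check is a
// hypothetical placeholder for real authentication.
type Gateway struct {
	logic Logic
	token string
}

func (g Gateway) Handle(token, id string) (string, error) {
	if token != g.token {
		return "", errors.New("unauthorized")
	}
	return g.logic.OrderSummary(id)
}

func main() {
	store := memStore{orders: map[string]string{"42": "2 widgets"}}
	gw := Gateway{logic: Logic{store: store}, token: "secret"}
	out, err := gw.Handle("secret", "42")
	fmt.Println(out, err)
}
```

Replacing `memStore` with a client for a different database service leaves `Logic` and `Gateway` untouched, which is the separation the molecule is meant to enforce.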

The Template Features

- Communication Encryption: we have three choices here. The first is no encryption between services at all. The second is to have only the server implement TLS, which lets the client stay anonymous while the traffic is still encrypted. We are choosing the third option: both the client and the server implement TLS (mutual TLS, or mTLS), which is one of the requirements for Zero Trust.

- Golang Standards Project Layout: it’s not really a standard in the strictest sense, but I follow it for the sake of consistency across my projects. I can point back to https://github.com/golang-standards/project-layout and say, “That’s how the project is structured.” It simplifies my docs.

- gRPC Setup: a shell script that both documents the setup process and automates it.

- Boilerplate Code: already implemented for the server and client, leaving only some names to be changed. A health-check function will be built out because each service will need to communicate its readiness to Argo CD.

Template Makefile

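```makefile
# These targets run scripts rather than produce files, so declare them phony;
# otherwise a file or directory with the same name (e.g. certs/) makes make skip the target.
.PHONY: certs grpc-setup local-test-build protogen

certs:
	./scripts/grpc-server-cert.sh

grpc-setup:
	./scripts/grpc-setup.sh

local-test-build:
	./scripts/local-build.sh

protogen:
	./scripts/gen-protobuf.sh
```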

For the template Makefile, I opted to stick with setup tasks initially. I will refine this as I build out more of these services.

- I included the cert script so I could regenerate the certs as necessary.

- A setup task installs the protobuf compiler and the Go code generators for protobuf and gRPC.

- A local test build task runs a basic Go build script for the server.

- A protogen task reads the proto file and regenerates the protocol buffer code whenever you change the proto file.

We’ll take a deeper look at these when I write about building one of these services out. I’ll touch on the gRPC setup and protogen steps briefly here.

gRPC Setup

There’s not much to it: we need to install the protobuf compiler (protoc) and then the protoc plugins for Go.

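```bash
#!/bin/bash
# Stop on the first failed command
set -euo pipefail

# TODO: yeah, I know this is specific to Ubuntu and it's gonna sudo prompt; one day make it OS-agnostic
sudo apt-get install -y protobuf-compiler

# Go plugins for protoc; protoc finds them on PATH, so "$(go env GOPATH)/bin" must be on PATH
go install google.golang.org/protobuf/cmd/protoc-gen-go@latest
go install google.golang.org/grpc/cmd/protoc-gen-go-grpc@latest
```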

Once we have that, we can write a call to the protobuf compiler that reads our proto file and generates the protocol buffer code. Here is my protoc call for the application structure I’ve set up in the template:

```bash
protoc --go_out=pkg/protobuf --go_opt=paths=source_relative \
  --go-grpc_out=pkg/protobuf --go-grpc_opt=paths=source_relative \
  --proto_path=proto/ proto/app.proto
```

That assumes the template’s rough structure, with the proto file at proto/app.proto and the generated Go code written to pkg/protobuf.

The Go Server Boilerplate

Typically, I have some code that tunes runtime parameters like GOMAXPROCS and whatnot. I stripped all of that out of the template because I’d rather rebuild it based on our experimentation.

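```go
package main

import (
	"flag"
	"fmt"
	"log"
	"net"

	"google.golang.org/grpc"

	// Placeholder import path: replace with this service's module path.
	pb "github.com/your-org/your-service/pkg/protobuf"
)

// server implements the Template service generated from proto/app.proto.
// Embedding UnimplementedTemplateServer keeps it compiling as RPCs are added.
type server struct {
	pb.UnimplementedTemplateServer
}

// runServer creates the gRPC server, registers the generated service, and serves on listener.
func runServer(enableTLS bool, listener net.Listener) error {
	var serverOptions []grpc.ServerOption
	if enableTLS {
		// loadTLSCredentials (not shown) is assumed to return credentials.TransportCredentials.
		tlsCredentials, err := loadTLSCredentials()
		if err != nil {
			return fmt.Errorf("cannot load TLS credentials: %w", err)
		}
		serverOptions = append(serverOptions, grpc.Creds(tlsCredentials))
	}

	grpcServer := grpc.NewServer(serverOptions...)
	pb.RegisterTemplateServer(grpcServer, &server{})

	log.Printf("Start GRPC server at %s, TLS = %t", listener.Addr().String(), enableTLS)
	return grpcServer.Serve(listener)
}

func main() {
	port := flag.Int("port", 0, "the port for this service")
	enableTLS := flag.Bool("tls", false, "enable SSL/TLS")
	flag.Parse()

	listener, err := net.Listen("tcp", fmt.Sprintf("0.0.0.0:%d", *port))
	if err != nil {
		log.Fatalf("failed to listen: %v", err)
	}
	if err := runServer(*enableTLS, listener); err != nil {
		log.Fatalf("server stopped: %v", err)
	}
}
```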
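One detail in the boilerplate is worth knowing: the `-port` flag defaults to 0. Listening on port 0 asks the operating system for any free ephemeral port, so the actual port only shows up in the log line. The sketch below uses a hypothetical `listenOn` helper to show how to read the assigned port back from the listener. In a k8s Deployment you would probably pass an explicit `-port` so the container port lines up with the Service definition.

```go
package main

import (
	"fmt"
	"net"
)

// listenOn is a hypothetical helper mirroring the boilerplate's net.Listen call.
// It returns the listener plus the port actually bound, which differs from the
// requested port when the request was 0 (OS-assigned).
func listenOn(host string, port int) (net.Listener, int, error) {
	l, err := net.Listen("tcp", fmt.Sprintf("%s:%d", host, port))
	if err != nil {
		return nil, 0, err
	}
	return l, l.Addr().(*net.TCPAddr).Port, nil
}

func main() {
	l, port, err := listenOn("127.0.0.1", 0)
	if err != nil {
		fmt.Println("listen failed:", err)
		return
	}
	defer l.Close()
	fmt.Println("OS-assigned port:", port)
}
```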

And the initial proto file looks like:

```protobuf
syntax = "proto3";

option go_package = "pkg/protobuf";

// -----vvvvvvvv template place holder...def need to change
service Template {
  rpc CheckHealth(HealthRequest) returns (HealthReply) {}
}

message HealthRequest {
  string wazzup = 1;
}

message HealthReply {
  string waaazzzzup = 1;
}
```
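The health RPC is where the readiness check mentioned earlier would live. The hypothetical `readiness` type below is a hedged sketch: it tracks a flag that flips once the service’s dependencies are up. Its `checkHealth` mirrors the shape of `CheckHealth` but uses plain strings instead of the generated types, so it runs without the generated package. A real handler would read the request with `req.GetWazzup()` and build the reply as `&pb.HealthReply{Waaazzzzup: ...}`. It would more likely report the not-ready case as `status.Error(codes.Unavailable, ...)`. Note that `atomic.Bool` requires Go 1.19 or newer.

```go
package main

import (
	"errors"
	"fmt"
	"sync/atomic"
)

// readiness is a hypothetical helper a CheckHealth handler could consult.
type readiness struct {
	ready atomic.Bool
}

// MarkReady would be called once the service's dependencies are available.
func (r *readiness) MarkReady() { r.ready.Store(true) }

// checkHealth mirrors the CheckHealth RPC with plain strings: it echoes the
// request's greeting back only when the service has been marked ready.
func (r *readiness) checkHealth(wazzup string) (string, error) {
	if !r.ready.Load() {
		return "", errors.New("service not ready")
	}
	return "waaazzzzup, " + wazzup, nil
}

func main() {
	var r readiness
	_, err := r.checkHealth("hello")
	fmt.Println("before ready:", err)

	r.MarkReady()
	reply, _ := r.checkHealth("hello")
	fmt.Println("after ready:", reply)
}
```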

Summary

That’s as far as I’ve gotten in the project. Next, I will implement one of these services, which will allow us to refine this template. I will soon release a video to accompany this post, so check out my YouTube channel.
